Chinese AI company MiniMax has just launched a large-scale open-source reasoning model named MiniMax-M1. Released on Day 1 of the 5-day MiniMaxWeek event, the model appears to compete well with OpenAI o3, Claude 4, DeepSeek-R1, and other contemporaries. Along with the chatbot, MiniMax has also released an agent in beta, capable of running code, building apps, creating presentations, and more. In this article, we’ll explore the key features of MiniMax-M1, learn how to access it, and test it on a few tasks. We’ll also explore the MiniMax Agent, so read till the end to watch the agent in action!

What is MiniMax-M1?

MiniMax‑M1 is an open‑source, large‑scale, hybrid‑attention reasoning model, developed by Shanghai‑based AI startup MiniMax. The thinking model comes with a web search feature and can handle multimodal input in the form of text, images, presentations, and more across various formats.

Built on a Mixture‑of‑Experts (MoE) architecture, the model has a total of 456 billion parameters, of which about 45.9 billion are activated per token. It is released under an Apache 2.0 license, making it truly open-source.

MiniMax has introduced Lightning Attention for its M1 model, dramatically reducing inference costs. To put it in numbers, M1 uses just 25% of the FLOPs that DeepSeek‑R1 needs at 100,000‑token generation. The model is trained via large‑scale RL using CISPO (Clipped Importance Sampling Policy Optimization), which clips the importance-sampling weights rather than the token updates. This made training efficient: it ran on 512 H800 GPUs for 3 weeks, costing only around $534,700. That is far less than the millions spent by competitors like OpenAI and Google.
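To make the idea of clipping sampling weights instead of updates more concrete, here is a minimal Python sketch. It compares the per-token gradient coefficient under a PPO-style clipped objective with one where only the importance-sampling weight is clipped, which is how CISPO is described above. The function names, the clipping bounds (`eps_low`, `eps_high`, `eps`), and the toy numbers are all assumptions made for illustration; this is not MiniMax's training code.

```python
import math

def clipped_is_weight(logp_new, logp_old, eps_low=0.2, eps_high=0.2):
    """Importance-sampling ratio pi_new/pi_old, clipped to [1-eps_low, 1+eps_high]."""
    ratio = math.exp(logp_new - logp_old)
    return min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)

def cispo_style_coeff(logp_new, logp_old, advantage, eps_low=0.2, eps_high=0.2):
    """Coefficient multiplying grad(log pi_new) when only the weight is clipped.

    The clipped weight is treated as a constant (no gradient flows through it),
    so every token still contributes a bounded update.
    """
    return clipped_is_weight(logp_new, logp_old, eps_low, eps_high) * advantage

def ppo_style_coeff(logp_new, logp_old, advantage, eps=0.2):
    """Coefficient multiplying grad(log pi_new) under the PPO clipped surrogate.

    When the ratio has already moved past the clip range in the direction the
    advantage favours, the surrogate is flat and the token's update is dropped.
    """
    ratio = math.exp(logp_new - logp_old)
    if advantage > 0 and ratio > 1.0 + eps:
        return 0.0
    if advantage < 0 and ratio < 1.0 - eps:
        return 0.0
    return ratio * advantage

# Toy token whose probability rose sharply under the new policy (ratio ~ 2.72).
logp_old, logp_new, advantage = -2.0, -1.0, 1.0
print(ppo_style_coeff(logp_new, logp_old, advantage))    # update dropped
print(cispo_style_coeff(logp_new, logp_old, advantage))  # update kept, weight capped
```

In this toy case the PPO-style rule discards the token's contribution entirely, while the weight-clipping rule keeps it with a capped weight.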

Key Features

Here are the key features of the new MiniMax-M1 model:

- Hybrid Attention + MoE Efficiency: M1 combines an MoE architecture with Lightning Attention, cutting compute by about 75% compared to DeepSeek‑R1 at 100,000‑token generation.

- Large Context Window: M1’s biggest highlight is its massive context window of 1M input tokens and up to 80k output tokens, rivaling Google’s Gemini 2.5 Pro.

- Available in 40k & 80k Thinking Budgets: MiniMax offers M1‑40k (intermediate) and M1‑80k (advanced) versions. The 80k variant performs better on extended reasoning and complex tasks.

- Extended Agentic & Long‑Context Reasoning: The model’s benchmark results show that it excels at long-context benchmarks (OpenAI‑MRCR, LongBench‑v2) and agentic tool use (TAU‑bench).
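The headline numbers quoted above can be sanity-checked with a few lines of Python. The sketch below uses only figures stated in this article. The one assumption is that "3 weeks" means 21 full days of round-the-clock use, so the implied hourly rate is a rough estimate rather than a reported figure.

```python
TOTAL_PARAMS_B = 456.0       # total parameters, in billions
ACTIVE_PARAMS_B = 45.9       # parameters activated per token, in billions
FLOPS_VS_R1 = 0.25           # share of DeepSeek-R1's FLOPs at 100,000-token generation
NUM_GPUS = 512
TRAINING_DAYS = 21           # assumption: "3 weeks" = 21 days at 24 hours per day
TRAINING_COST_USD = 534_700

def active_fraction(active_b, total_b):
    """Share of the model's parameters used for each token."""
    return active_b / total_b

def gpu_hours(num_gpus, days):
    """Total GPU-hours if every GPU runs continuously for the given days."""
    return num_gpus * days * 24

def cost_per_gpu_hour(cost_usd, hours):
    """Implied price of one GPU-hour."""
    return cost_usd / hours

hours = gpu_hours(NUM_GPUS, TRAINING_DAYS)
print(f"Active parameters per token: {active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B):.1%}")
print(f"Compute saved vs DeepSeek-R1: {1 - FLOPS_VS_R1:.0%}")
print(f"GPU-hours: {hours:,}")
print(f"Implied cost per GPU-hour: ${cost_per_gpu_hour(TRAINING_COST_USD, hours):.2f}")
```

So only about a tenth of the parameters are active for any given token, and the stated budget works out to roughly two dollars per GPU-hour under the 21-day assumption.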

MiniMax‑M1: Benchmark Performance

Here’s how the M1‑80k model stacks up across major benchmark domains:

- AIME 2024: MiniMax-M1 scored 86.0% on this competition-math benchmark, a result competitive with proprietary models like OpenAI o3, Claude 4 Opus, and Gemini 2.5 Pro.

- LiveCodeBench: MiniMax-M1 scored 65.0% in this coding benchmark, just slightly behind OpenAI o3 and Gemini.

- SWE-bench Verified: In this software engineering benchmark, MiniMax-M1 achieved 56.0%, clearly outperforming DeepSeek-R1 and Qwen3 by over 10%.

- TAU-bench: When it comes to agentic tool use, MiniMax-M1 scored 62.8%, beating both DeepSeek and Qwen3. This shows the model’s strong tool-using capability.

- MRCR: The MRCR benchmark tests the models’ skills in multi-round document analysis and long-context reasoning. MiniMax-M1 scored 73.4% on the test, coming close to top commercial models and far ahead of other open-source ones.

How to Access MiniMax‑M1

MiniMax‑M1 is fully open‑sourced and available on both GitHub and Hugging Face. You can also use the model directly on its chatbot interface: https://chat.minimax.io/.

The MiniMax API offers structured function-calling interfaces and other chatbot APIs as well. It also provides tools for search, image/video generation, voice synthesis, and voice cloning – now tailored for agentic workflows.
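As a rough illustration of what a structured function-calling request might look like, the sketch below builds a JSON payload in the widely used OpenAI-style chat-completions format. Everything specific here is an assumption rather than MiniMax's documented API: the model identifier, the `tools` schema, and the hypothetical `search_events` function are placeholders, so check the official API reference before relying on any of them. The code only builds and prints the payload; it does not send a request.

```python
import json

def build_tool_call_request(user_message, model="MiniMax-M1"):
    """Build an OpenAI-style chat payload with one callable tool (hypothetical schema)."""
    search_events_tool = {
        "type": "function",
        "function": {
            "name": "search_events",  # hypothetical tool name
            "description": "Find events of a given category in a city.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string"},
                    "category": {"type": "string"},
                },
                "required": ["city"],
            },
        },
    }
    return {
        "model": model,  # placeholder model identifier
        "messages": [{"role": "user", "content": user_message}],
        "tools": [search_events_tool],
    }

payload = build_tool_call_request(
    "What stand-up comedy shows are happening in Gurgaon this week?"
)
print(json.dumps(payload, indent=2))
```

In this style of interface, the model would reply with the name of the function it wants to call and a JSON object of arguments, and the calling application runs the function and returns the result.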

MiniMax-M1: Hands-on Testing

Now that we know how to access MiniMax-M1, let’s try it out. In this section, I’ll be testing out three different prompts to gauge the performance of the model in:

- Coding an animated simulation

- Searching the web in real-time

- Solving a logical reasoning puzzle

Let’s get started!

Task 1: Animated Simulation

Prompt: “Generate a simulation of a red pentagon, rotating clockwise inside a black hexagon. There are 2 balls moving inside the pentagon – one blue, one yellow – bouncing off of the walls following the laws of Physics. Every time a ball hits an edge, its colour must change. And every time they collide with each other, they must both become the mixture of both their colours.”

Output

Review

In about 10 minutes, MiniMax-M1 generated clean, efficient code with clear explanations to create the simulation. The result was visually clear and followed the prompt closely, but the physics was not flawless: the balls sometimes got stuck at the edges, and they were eventually thrown out of the simulation, which I did not expect. On the whole, though, I liked the result.
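Both glitches, balls sticking to an edge and balls escaping, are common in hand-rolled collision code. As a hedged sketch of the usual remedies, and not the code MiniMax-M1 produced, the Python below reflects a velocity only when the ball is moving into the wall, pushes an overlapping ball back inside, and mixes two RGB colours by averaging. All function names are hypothetical, and the wall is treated as stationary; a rotating container would also need the wall's own velocity at the contact point.

```python
def reflect(velocity, normal):
    """Reflect velocity about a unit inward normal, only if moving into the wall."""
    vx, vy = velocity
    nx, ny = normal
    dot = vx * nx + vy * ny
    if dot >= 0:
        # Already moving away from the wall: reflecting again would flip the
        # velocity back toward it, which is a typical cause of sticking.
        return velocity
    return (vx - 2 * dot * nx, vy - 2 * dot * ny)

def push_inside(position, radius, wall_point, normal):
    """Move the ball back inside if it overlaps the wall, so it cannot slip out."""
    px, py = position
    wx, wy = wall_point
    nx, ny = normal
    # Signed distance from the wall to the ball centre along the inward normal.
    distance = (px - wx) * nx + (py - wy) * ny
    penetration = radius - distance
    if penetration > 0:
        return (px + penetration * nx, py + penetration * ny)
    return position

def mix_colours(c1, c2):
    """Average two RGB colours channel by channel."""
    return tuple((a + b) // 2 for a, b in zip(c1, c2))

# Ball hitting a floor at y = 0 whose inward normal points up.
print(reflect((3.0, -4.0), (0.0, 1.0)))
print(push_inside((5.0, 0.5), 1.0, (0.0, 0.0), (0.0, 1.0)))
print(mix_colours((0, 0, 255), (255, 255, 0)))
```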

Task 2: Web Search

Now let’s see how well the model can search the web in real-time. In this task, I’m going to ask MiniMax M1 to find me events happening this week in my city. I’ll be turning on the ‘Search’ mode for this task.

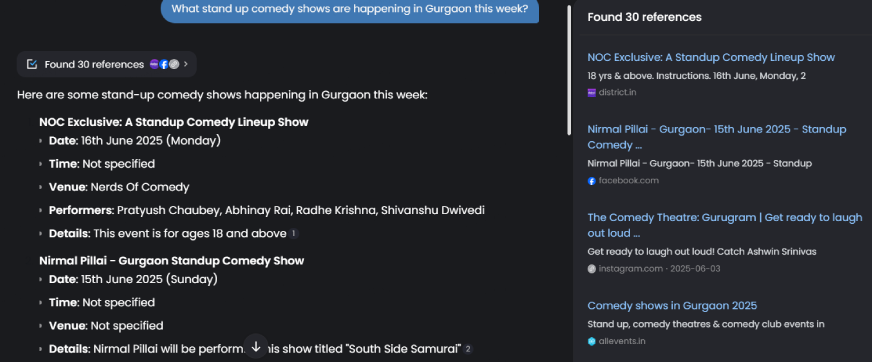

Prompt: “What stand-up comedy shows are happening in Gurgaon this week?”

Output

Review

MiniMax M1 almost immediately searched across 30 different websites including District, All Events, and even event listings on Facebook and Instagram. It gathered all the info and gave me a list of all the stand-up events happening around. The search was done right, but the model was unable to read or detect the timing and venue of the events. However, it listed out all the source websites on the right-hand side, making it easier to access the booking sites.

Task 3: Logical Puzzle

MiniMax M1 is a reasoning model with thinking capabilities. So, I’m going to give it a logical puzzle to solve in this task. I’ll also turn on the ‘think’ mode for this to see the model’s thinking process.

Prompt: “Four people are crossing a bridge at night, so they all need a torch – but they just have one that only lasts 15 minutes. Alice can cross in one minute, Ben in two minutes, Cindy in five minutes and Don in eight minutes. No more than two people can cross at a time; and when two cross, they have to go at the slower person’s pace. How do they get across in 15 minutes?”

Output

“The optimal solution to get all four across in 15 minutes is as follows:

- Alice and Ben cross together (2 minutes).

Time elapsed: 2 minutes

People on far side: Alice, Ben

- Alice returns alone (1 minute).

Time elapsed: 3 minutes

People on start side: Alice, Cindy, Don

- Cindy and Don cross together (8 minutes).

Time elapsed: 11 minutes

People on far side: Cindy, Don, Ben

- Ben returns alone (2 minutes).

Time elapsed: 13 minutes

People on start side: Alice, Ben

- Alice and Ben cross together again (2 minutes).

Time elapsed: 15 minutes

All four on far side: Alice, Ben, Cindy, Don

Key strategy: Use the two fastest individuals (Alice and Ben) to shuttle the torch efficiently, minimizing the time slower individuals (Cindy and Don) spend crossing together. This balances the need to move slower individuals while preserving torch time.”

Review

MiniMax-M1 took about 2 minutes (125.2 seconds, to be precise) to think through the problem and arrive at the solution. Its reasoning was on point and led to the right answer. It never had to backtrack, although at some points it took a little longer to think.
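The 15-minute answer can be checked independently with a small search over every possible sequence of crossings. The Python sketch below is my own verification aid, not something the model produced. It runs a shortest-path search (Dijkstra's algorithm) over states made up of who is still on the start side and where the torch is. The function name and state encoding are illustrative choices.

```python
import heapq
from itertools import combinations

def min_crossing_time(times):
    """Return the minimum total time for everyone to cross with one torch."""
    paces = list(times.values())
    n = len(paces)
    full = (1 << n) - 1          # bit i set = person i is still on the start side
    best = {(full, 0): 0}        # torch side: 0 = start side, 1 = far side
    heap = [(0, full, 0)]
    while heap:
        elapsed, mask, torch = heapq.heappop(heap)
        if mask == 0:
            return elapsed       # everyone is on the far side
        if elapsed > best.get((mask, torch), float("inf")):
            continue             # stale queue entry
        # Only people standing next to the torch can move.
        here = [i for i in range(n) if ((mask >> i) & 1) == 1 - torch]
        for size in (1, 2):      # at most two people cross at a time
            for group in combinations(here, size):
                cost = max(paces[i] for i in group)  # slower person's pace
                new_mask = mask
                for i in group:
                    new_mask ^= 1 << i               # person switches sides
                state = (new_mask, 1 - torch)
                new_time = elapsed + cost
                if new_time < best.get(state, float("inf")):
                    best[state] = new_time
                    heapq.heappush(heap, (new_time, new_mask, 1 - torch))
    return None

puzzle = {"Alice": 1, "Ben": 2, "Cindy": 5, "Don": 8}
print(min_crossing_time(puzzle))
```

The search confirms that 15 minutes is the best possible total, so the model's plan uses the whole torch budget with nothing to spare.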

MiniMax Agent in Action

Now let’s get to the coolest part of MiniMax’s new launches – the MiniMax Agent. Although currently in beta phase, its capabilities and diverse use cases make it quite promising in the world of AI agents. To test out its prowess, I gave it a prompt to build me an interactive website. Here’s how that went.

Prompt: “Build an interactive website featuring a virtual tour of India.

- It must show the country map with the states marked, letting the user read the name of the state and the respective capital city when they hover the mouse over it.

- It must zoom into the chosen state upon clicking, showing all the famous monuments and landmarks as representative icons.

- Again, their names and location (name of city) must be shown when hovered above the icon.

- Upon clicking on an icon, a side panel must pop up showing actual images, the location, visiting times, and entrance fee of the landmark.

- The user must have the option of choosing multiple landmarks, and the app/website must create a realistic itinerary of visiting all those places, also stating how many days it would take to cover them all. This must also take into consideration the commute time based on Google Maps.”

Output

And here’s the website it built for me:

Review

MiniMax Agent did a great job of creating an interactive website to meet my requirements. Although the final website wasn’t exactly what I had described in my prompt, I must say the results are impressive. The agent found the info, added the text and images, got API keys, accessed Google Maps and other apps, built the whole system, and even tested it, all by itself. It took about 20 minutes to do the whole thing and even provided documentation of the process, test results, and all other details. A free tool doing all of this so well is just mind-blowing!

You can experience the full site here: https://03w1ujb85t.space.minimax.io/

You can also try out the agent for free. Once you sign up using your email ID, MiniMax gives you 1,000 free credits to spend on running the agent.

Conclusion

MiniMax‑M1 represents a major leap in open-source AI. It’s a first-of-its-kind hybrid-attention MoE model, combining scale and compute efficiency. With an astonishing 1M token context window, this new model is capable of long-form reasoning and document understanding. Despite the low training costs, it shows competitive or superior performance across standard benchmarks.

The MiniMax Agent is also quite impressive, being able to create presentations, websites, and apps on its own. The chatbot interface and live updates on the side give users the feeling of vibe coding. On the whole, MiniMax‑M1 sets a new standard in open-source model development. Blending technical sophistication, economic efficiency, and accessibility, it has built a powerful foundation for next-generation AI chatbots and agents. Since it’s free to use for everyone, go ahead, try it out, and let us know in the comments how you find it.